An nginx HTTP-to-HTTPS Redirect Mystery, and Configuration Advice

I noticed a weird thing last night on an nginx server I administer. The logs were full of lines like this:

42.232.104.114 - - [25/Mar/2018:04:50:49 +0000] "GET http://www.ioffer.com/i/new-fashion-fine-gold-bracelet-versaec-bracelet-641175733 HTTP/1.1" 301 185 "http://www.ioffer.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0; Hotbar 4.1.8.0; RogueCleaner; Alexa Toolbar)"

Traffic was streaming in continuously: maybe ten or twenty requests per second.

At first I thought the server had been hacked, but really it seemed people were just sending lots of traffic and getting 301 redirects. I could reproduce the problem with a telnet session:

$ telnet 198.51.100.42 80

Trying 198.51.100.42...

Connected to example.com.

Escape character is '^]'.

GET http://www.ioffer.com/i/men-shouder-messenger-bag-briefcase-shoulder-bags-649303549 HTTP/1.1

Host: www.ioffer.com

HTTP/1.1 301 Moved Permanently

Server: nginx/1.10.1

Date: Sun, 25 Mar 2018 04:56:06 GMT

Content-Type: text/html

Content-Length: 185

Connection: keep-alive

Location: https://www.ioffer.com/i/men-shouder-messenger-bag-briefcase-shoulder-bags-649303549

<html>

<head><title>301 Moved Permanently</title></head>

<body bgcolor="white">

<center><h1>301 Moved Permanently</h1></center>

<hr><center>nginx/1.10.1</center>

</body>

</html>

In that session, I typed the first two lines after Escape character..., plus the blank line following. Normally a browser would not include a whole URL after GET, only the path, like GET /about.html HTTP/1.1, but the whole URL is what a client sends when it is talking to a proxy. It may also be possible to leave off the Host header. Technically HTTP/1.1 requires it, so I added it out of habit; I didn’t test without it.
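If you would rather script that check than type into telnet, here is a minimal sketch in Python. The function names build_absolute_form_request and send_raw_request are my own (hypothetical), and unlike the telnet session it adds a Connection: close header so the read loop ends when the server hangs up. Treat it as a rough probe, not a polished tool.

```python
import socket


def build_absolute_form_request(url: str, host: str) -> bytes:
    """Build a proxy-style request whose request line carries the full URL."""
    lines = [
        f"GET {url} HTTP/1.1",
        f"Host: {host}",
        # Assumption: not in the telnet session; added so the server closes
        # the connection and the read loop below terminates.
        "Connection: close",
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def send_raw_request(server_ip: str, request: bytes, port: int = 80,
                     timeout: float = 5.0) -> bytes:
    """Send raw bytes to the server and return everything it sends back."""
    chunks = []
    with socket.create_connection((server_ip, port), timeout=timeout) as sock:
        sock.sendall(request)
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks)


# Example use (needs network access to your own server):
#   req = build_absolute_form_request("http://www.ioffer.com/", "www.ioffer.com")
#   print(send_raw_request("198.51.100.42", req).decode("latin-1"))
```

If the Location header in the reply names the foreign host rather than your own domain, the server is echoing back whatever host the client supplied.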

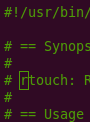

So what was happening here? I was following some common advice to redirect HTTP to HTTPS, using configuration like this:

server {

listen 80;

server_name example.com *.example.com;

return 301 https://$host$request_uri;

}

The problem is that $host evaluates to whatever the client sends. In order of precedence, it can be (1) the host name from the request line (as in my example), (2) the Host header, or (3) what you declared as the server_name for the matching block. A safer alternative is to send people to https://$server_name$request_uri. Then everything is under your control. You can see people recommending that on the ServerFault page.
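To make that precedence concrete, here is a rough model of it in Python. This is only a sketch of the three rules as described above, not nginx's actual code; resolve_host is a hypothetical name, and the port stripping and lowercasing are simplifications that lean on urlsplit.

```python
from typing import Optional
from urllib.parse import urlsplit


def resolve_host(request_target: str, host_header: Optional[str],
                 matched_server_name: str) -> str:
    """Rough model of the $host precedence (not nginx's real implementation)."""
    # (1) Host name from an absolute-form request line,
    #     e.g. "GET http://www.ioffer.com/i/... HTTP/1.1".
    from_request_line = urlsplit(request_target).hostname
    if from_request_line:
        return from_request_line
    # (2) The Host header; urlsplit lowercases it and drops any port.
    if host_header:
        from_header = urlsplit("//" + host_header).hostname
        if from_header:
            return from_header
    # (3) The server_name of the block that matched the request.
    return matched_server_name


# The attacker-supplied request line wins over everything else:
print(resolve_host("http://www.ioffer.com/i/x", "example.com", "example.com"))
# An ordinary browser request falls through to the Host header:
print(resolve_host("/about.html", "blog.example.com", "example.com"))
```

The point is that the first two sources are entirely client-controlled, so a redirect built from $host can point anywhere the client likes.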

The catch with $server_name comes when you declare more than one server_name, or when one of them is a wildcard. The $server_name variable always evaluates to the first one. It also doesn’t expand wildcards. (How could it?) That wouldn’t work for me, because in this project admins can add new subdomains any time, and I don’t want to update nginx config files when that happens.

Eventually I solved it using a config like this:

server {

listen 80 default_server;

server_name example.com;

return 301 https://example.com$request_uri;

}

server {

listen 80;

server_name *.example.com;

return 301 https://$host$request_uri;

}

Notice the default_server modifier. If any traffic actually matches *.example.com, it will use the second block, but otherwise it will fall back to the first block, where there is no $host variable, but just a hardcoded redirect to my own domain. After I made this change, I immediately saw traffic getting the redirect and making a second request back to my own machine, usually getting a 404. I expect pretty soon whoever is sending this traffic will knock it off. If not, I guess it’s free traffic for me. :-)

(Technically default_server is not required since if no block is the declared default, nginx will make the first the default automatically, but being explicit seems like an improvement, especially here where it matters.)
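As a sanity check on the logic, here is the decision those two blocks make, written out as a small Python function. It is only a model of the config above under my own simplifications (redirect_target is a hypothetical name, and the wildcard match is approximated with a suffix test), so don't read it as how nginx matches server names internally.

```python
def redirect_target(host: str, request_uri: str) -> str:
    """Model of the two-block config: wildcard block, else hardcoded default."""
    host = host.lower()
    # Second block: *.example.com matches any name ending in ".example.com",
    # so echoing $host back is safe because it is one of my subdomains.
    if host.endswith(".example.com"):
        return f"https://{host}{request_uri}"
    # First block (default_server): example.com itself and everything else,
    # including foreign hosts, gets the hardcoded domain.
    return f"https://example.com{request_uri}"


print(redirect_target("blog.example.com", "/about.html"))
print(redirect_target("www.ioffer.com", "/i/some-bracelet"))
```

The second call is the interesting one: the foreign host name never makes it into the Location header.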

I believe I could also use a setup like this:

server {

listen 80 default_server;

return 301 "https://www.youtube.com/watch?v=dQw4w9WgXcQ";

}

server {

listen 80;

server_name example.com *.example.com;

return 301 https://$host$request_uri;

}

There I list all my legitimate domains in the second block, so the default only matches when people are playing games. I guess I’m too nice to do that for real though, and anyway it would make me nervous that some other misconfiguration would activate that first block more often than I intended.

I’d still like to know what the point of this abuse was. My server wasn’t acting as an open proxy exactly, because it wasn’t fulfilling these requests on behalf of the clients and passing along the response (confirmed with tcpdump -n 'tcp[tcpflags] & (tcp-syn) != 0 and src host 198.51.100.42'); it was just sending a redirect. So what was it accomplishing?

The requests were for only a handful of different domains, mostly Chinese. They came from a variety of IPs. Sometimes an IP would make requests for hours and then disappear. The user agents varied. Most were normal, like Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0), but some mentioned toolbars like the example above.
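If you want to tally which domains are being requested, something like this Python sketch works on an access log in the combined format shown earlier. The regex and the name count_absolute_form_hosts are my own (hypothetical), and it only looks at requests whose request line carries a full http or https URL.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Matches the quoted request line, e.g. "GET http://host/path HTTP/1.1".
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<target>\S+) HTTP/[\d.]+"')


def count_absolute_form_hosts(lines):
    """Count target hosts for requests that put a full URL on the request line."""
    counts = Counter()
    for line in lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue
        parts = urlsplit(match.group("target"))
        # Ordinary requests like "GET /about.html" have no scheme or host.
        if parts.scheme in ("http", "https") and parts.hostname:
            counts[parts.hostname] += 1
    return counts


# Example use (the log path is an assumption; adjust for your setup):
#   with open("/var/log/nginx/access.log") as f:
#       for host, n in count_absolute_form_hosts(f).most_common(10):
#           print(n, host)
```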

I guess if it were DNS sending them to my server there would (possibly) be a redirect loop, which I wasn’t seeing. So was my server configured as their proxy?

To learn a little more, I moved nginx over to port 81 and ran this:

mkfifo reply

netcat -kl 80 < reply | tee saved | netcat 127.0.0.1 81 > reply

(At first instead of netcat I tried ./mitmproxy --save-stream-file +http.log --listen-port 80 --mode reverse:http://127.0.0.1:81 --set keep_host_header, but it threw errors on requests with full URLs (GET http://example.com/ HTTP/1.1) because it thought it should only see those in regular mode.)

Once netcat was running I could tail -F saved in another session. I saw requests like this:

GET http://www.douban.com/ HTTP/1.1

User-Agent: Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)

Accept: text/html, */*

Accept-Language: zh-cn; en-us

Referer: http://www.douban.com/

Host: www.douban.com

Pragma: no-cache

I also saw one of these:

CONNECT www.kuaishou.com:443 HTTP/1.0

User-Agent: Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11

Host: www.kuaishou.com:443

Content-Length: 0

Proxy-Connection: Keep-Alive

Pragma: no-cache

That is a more normal proxy request, although it seems like it was just regular scanning, because I’ve always returned a 400 to those.

Maybe the requests that were getting 301’d were just regular vulnerability scanning too? I don’t know. It seemed like something more specific than that.

The negatives for me were noisy logs and elevated bandwidth/CPU. Not a huge deal, but whatever was going on, I didn’t want to be a part of it.

. . .

By the way, as long as we’re talking about redirecting HTTP to HTTPS, I should mention HSTS, which is a way of telling browsers never to use HTTP here in the future. If you’re doing a redirect like this, it may be a good thing to add (to the HTTPS response, not the HTTP one). On the other hand it has some risks, if in the future you ever want to use HTTP again.
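For reference, the HSTS policy is just the value of a Strict-Transport-Security response header. Here is a tiny Python sketch that builds that value; hsts_header_value is a hypothetical helper of mine, and the numbers in the examples are only illustrations, not recommendations.

```python
def hsts_header_value(max_age_seconds: int, include_subdomains: bool = False) -> str:
    """Build the value for a Strict-Transport-Security response header."""
    if max_age_seconds < 0:
        raise ValueError("max-age must not be negative")
    value = f"max-age={max_age_seconds}"
    if include_subdomains:
        # Extends the policy to every subdomain, so be sure they all do HTTPS.
        value += "; includeSubDomains"
    return value


# A short max-age is a cautious way to start; a year is a common long-term value.
print("Strict-Transport-Security:", hsts_header_value(300))
print("Strict-Transport-Security:", hsts_header_value(31536000, include_subdomains=True))
```

A max-age of 0 tells browsers to forget the policy, but they only see that on a later HTTPS visit, which is part of why going back to HTTP is awkward.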
